There’s a persistent belief in the ecommerce ecosystem that using a popup to capture email and SMS subscribers hurts site conversion rates or revenue-per-visitor metrics. You’ve probably heard it before: “Popups are annoying,” “They kill conversions,” “Don’t interrupt the customer journey.”

On the surface, this argument seems logical. Popups introduce friction to the customer journey—people have to stop, enter their email address or phone number, and deal with an interruption.

How to Run a Popup Holdout Test

You’ve probably run a popup A/B test before. Your email or SMS platform allows you to test the performance differences between two popups. However, those performance indicators are always reduced to two basics: email and SMS opt-in rates.

The problem with that? It doesn’t tell you anything about the actual revenue impact of the popups.

Some providers report revenue associated with the popup, but they only do it for the segment of your customers who subscribed—so that doesn’t help either.

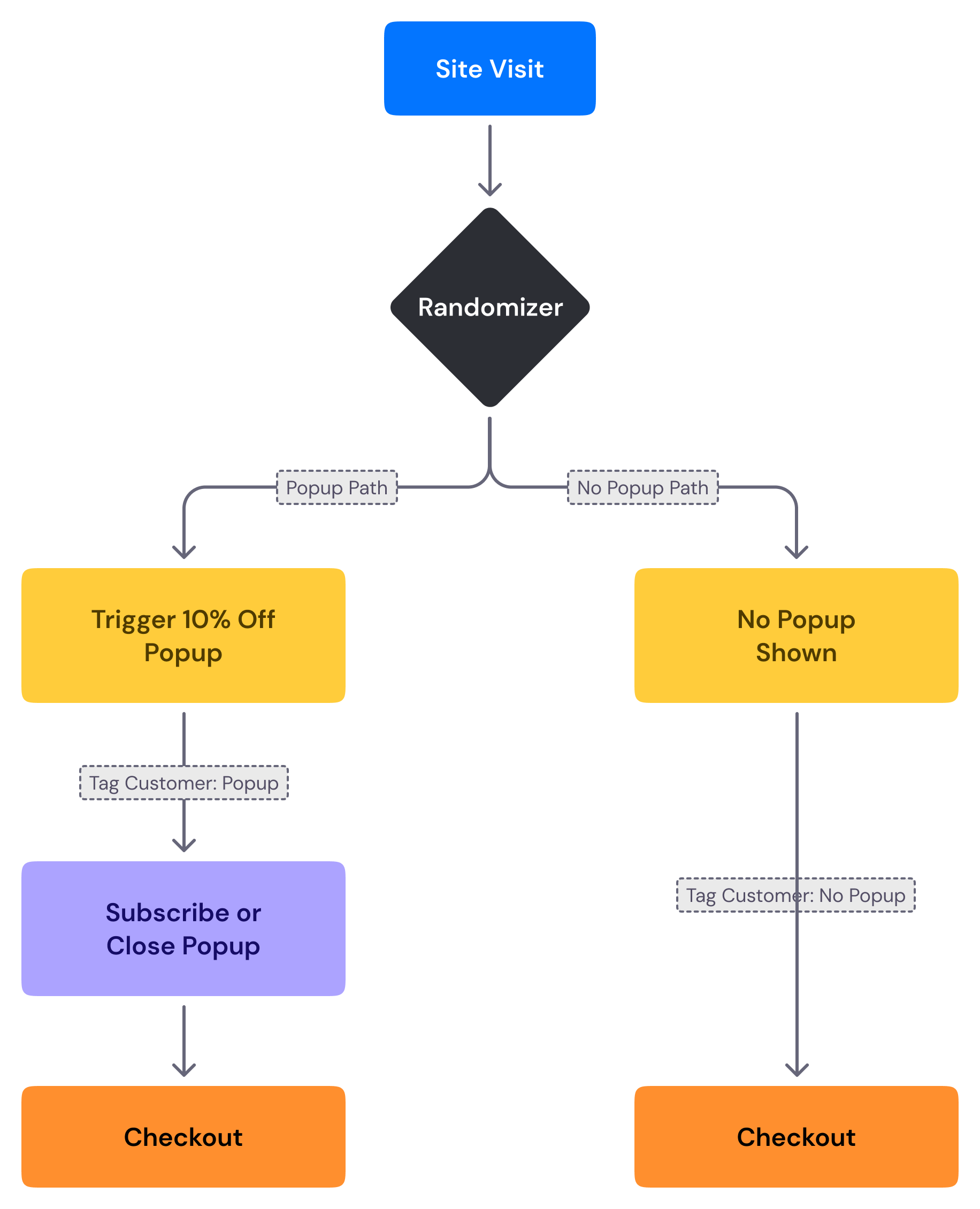

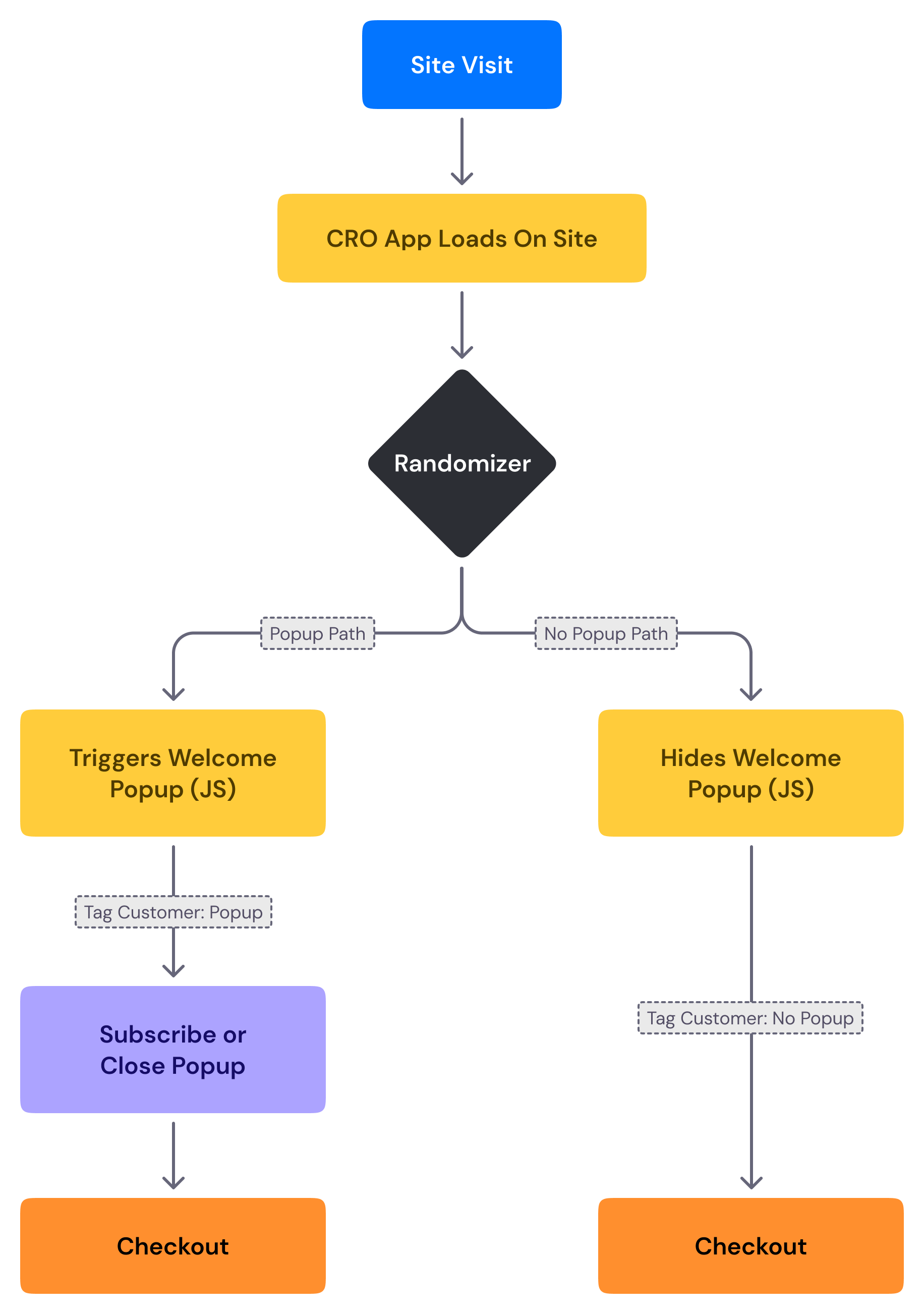

If you want to measure the actual revenue impact of your popups, you need to run a holdout test with a CRO mindset. Show the popup to Group A and tag every visitor who lands in that group. Withhold the popup from Group B, but tag those visitors too. Then follow conversion rate, AOV, and revenue per visitor for both groups through their customer journey.

Once you run this setup, you’ll have visibility not only into email and SMS opt-in rates, but also into revenue, orders, CR, AOV, and most importantly, RPV (Revenue Per Visitor).
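To make those metrics concrete, here is a minimal sketch of how CR, AOV, and RPV relate for each group. The `GroupTotals` structure and all numbers are made up for illustration; they are not taken from any test in this article, and your testing tool will report these figures for you.

```python
from dataclasses import dataclass


@dataclass
class GroupTotals:
    """Totals for one test group (hypothetical structure for illustration)."""
    visitors: int   # every tagged visitor, whether or not they subscribed or bought
    orders: int
    revenue: float


def summarize(group: GroupTotals) -> dict[str, float]:
    """Return conversion rate, average order value, and revenue per visitor."""
    cr = group.orders / group.visitors if group.visitors else 0.0
    aov = group.revenue / group.orders if group.orders else 0.0
    rpv = group.revenue / group.visitors if group.visitors else 0.0
    return {"cr": cr, "aov": aov, "rpv": rpv}


# Made-up numbers, for illustration only.
popup = summarize(GroupTotals(visitors=10_000, orders=320, revenue=24_000.0))
holdout = summarize(GroupTotals(visitors=10_000, orders=300, revenue=23_100.0))

# Relative RPV difference between the popup group and the no-popup group.
rpv_lift = popup["rpv"] / holdout["rpv"] - 1

print(f"Popup RPV:   {popup['rpv']:.2f}")
print(f"Holdout RPV: {holdout['rpv']:.2f}")
print(f"RPV lift:    {rpv_lift:.1%}")
```

Note that RPV equals CR multiplied by AOV, which is why a discount that lowers AOV can still come out neutral or positive if it lifts conversion rate enough. The denominator is every visitor in the group, not just subscribers, and that is the difference from the subscriber-only revenue reports mentioned earlier.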

You can run these tests using a CRO tool (Intelligems, Convert, Shoplift) connected to your popups (advanced), or use Recart’s Popup Revenue Testing (simple).

Option 1: Use Recart’s Popup Revenue Testing Tool

The simplest approach is to set up a popup vs. no-popup A/B test directly in Recart. Here’s how it works:

- Create two Recart popups: A) Welcome Popup (control) and B) Duplicate of Welcome Popup (variant)

- Set the variant to invisible (the popup still triggers so the visitor is counted, but nothing is displayed)

- Launch an A/B test for these two popups with 50/50 allocation

- Users exposed in both groups are tracked throughout their customer journey

- Revenue is attributed to both the popup group and the no-popup group

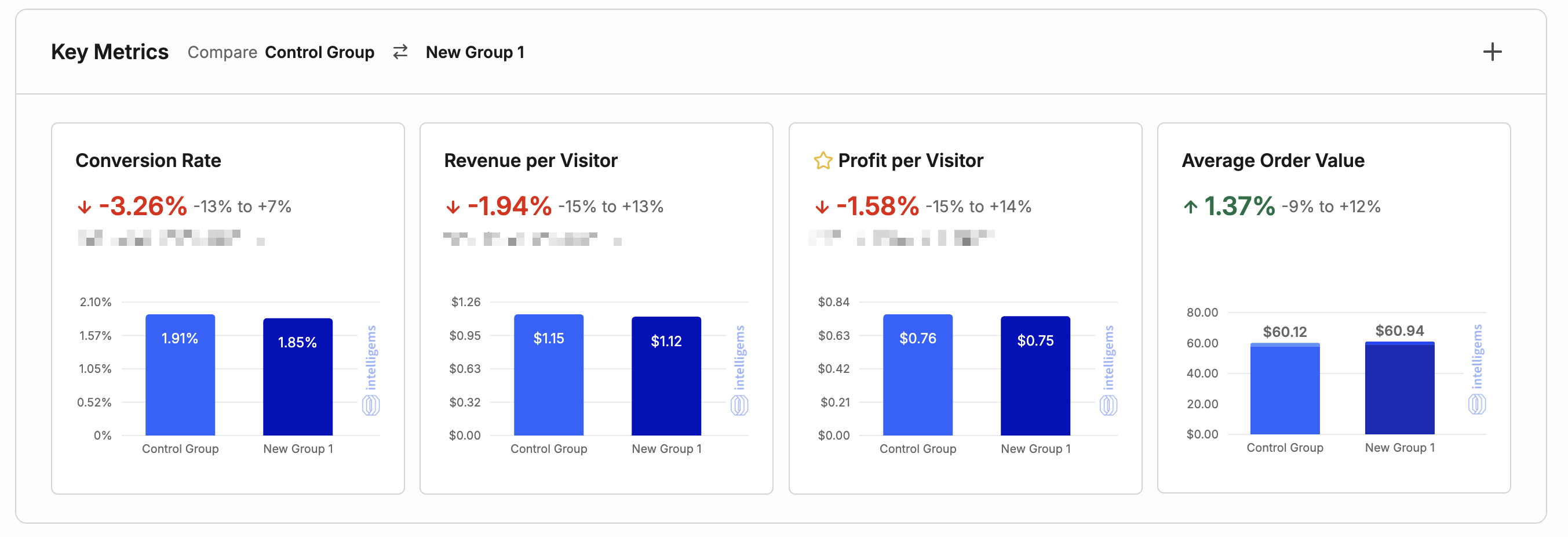

The results overview looks like the screenshot above. It lets you isolate the same-session revenue difference between showing a popup and not showing one.

Option 2: Use Third-Party CRO Tools

For a more advanced setup, you can use revenue measurement tools like Intelligems or Monocle. This requires:

- A reliable way for the CRO tool to call or suppress your popups

- Session tracking across the entire customer journey

- Proper revenue attribution to each test variant

The methodology is the same as the Recart approach—you’re comparing revenue per visitor between those who see the popup and those who don’t.
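The three requirements above can be sketched in a few lines. This is a simplified illustration under stated assumptions, not how Intelligems, Monocle, or Recart implement it: `assign_group`, `should_show_popup`, and `Attribution` are hypothetical names, and a real setup would rely on the CRO tool's own assignment and tracking.

```python
import hashlib
from collections import defaultdict


def assign_group(visitor_id: str, experiment: str = "popup-holdout") -> str:
    """Deterministically place a visitor in 'popup' or 'holdout' (roughly 50/50).

    Hashing the visitor ID keeps the assignment stable across page views,
    so the same visitor never switches groups mid-journey.
    """
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode()).hexdigest()
    return "popup" if int(digest, 16) % 2 == 0 else "holdout"


def should_show_popup(visitor_id: str) -> bool:
    """Hypothetical gate: call the popup only for the popup group."""
    return assign_group(visitor_id) == "popup"


class Attribution:
    """Tracks tagged visitors and revenue per test group (illustrative only)."""

    def __init__(self) -> None:
        self.visitors: dict[str, set[str]] = defaultdict(set)
        self.revenue: dict[str, float] = defaultdict(float)

    def record_visit(self, visitor_id: str) -> None:
        # Tag every visitor, including those who never see a popup.
        self.visitors[assign_group(visitor_id)].add(visitor_id)

    def record_order(self, visitor_id: str, amount: float) -> None:
        # Credit the order to the group the visitor was assigned to.
        self.revenue[assign_group(visitor_id)] += amount

    def rpv(self, group: str) -> float:
        count = len(self.visitors[group])
        return self.revenue[group] / count if count else 0.0


# Usage with made-up visitors and order values.
tracker = Attribution()
for i in range(10):
    tracker.record_visit(f"visitor-{i}")
tracker.record_order("visitor-3", 48.0)
tracker.record_order("visitor-7", 62.5)

for group in ("popup", "holdout"):
    print(group, len(tracker.visitors[group]), round(tracker.rpv(group), 2))
```

The key design choice is that both groups are tagged at the moment of exposure, so revenue is divided by everyone who could have seen the popup rather than only by those who submitted it.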

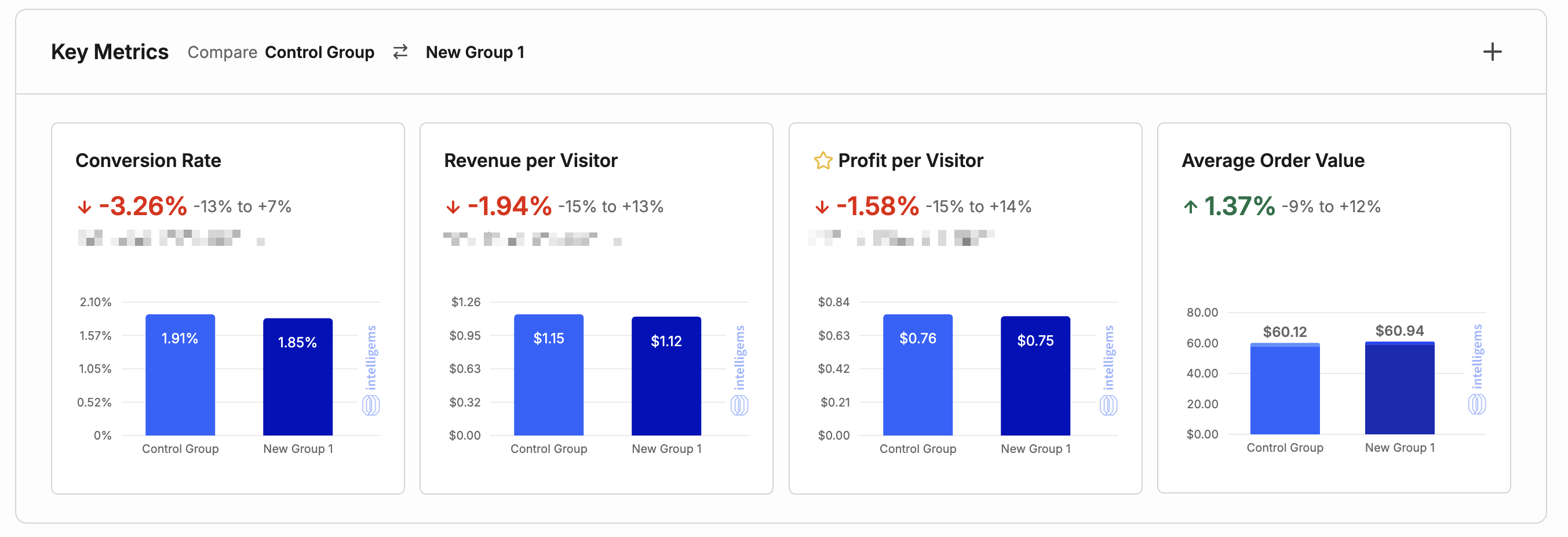

The screenshot above shows example results from Intelligems. Your CRO tool does not measure the email/SMS impact, so you need to track your list growth separately here.

The Important Caveat: Same-Session Revenue

Here’s what you need to understand about these tests: they measure same-session revenue.

This means you’re identifying the immediate revenue impact of showing a popup. That’s a good start and answers the question of whether popups hurt your baseline conversion rate.

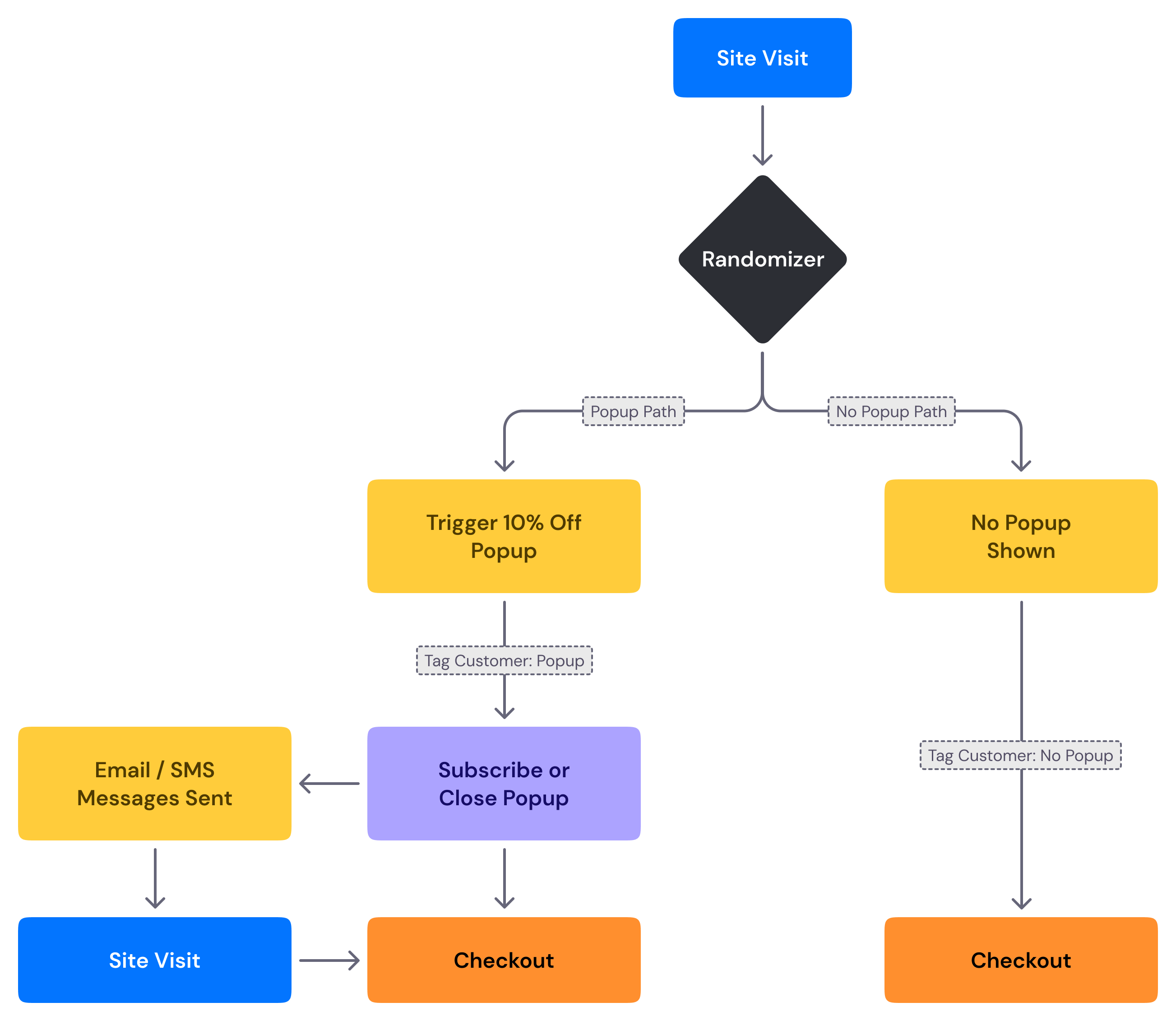

However, with a popup enabled on your site, you’re collecting email and SMS subscribers who will generate second and third-wave revenue through subsequent messages.

In reality, visitors in the popup group who subscribe go on to receive dozens of email and text campaigns that direct them back to your website, so the popup keeps generating revenue long after the first session.

Measuring that LTV impact would be the prudent thing to do, but it introduces new layers of testing complexity, so most brands focus on same-session testing.

What Dozens of Tests Have Revealed

At Recart, we’ve run dozens of popup vs. no-popup incrementality tests across hundreds of brands. Here’s what we’ve found:

In most cases, revenue per visitor was either neutral or actually leaning towards the popup version. We have yet to find a single case where the popup was hugely and provably detrimental to immediate revenue results.

Here are three specific tests to showcase different results.

True Classic

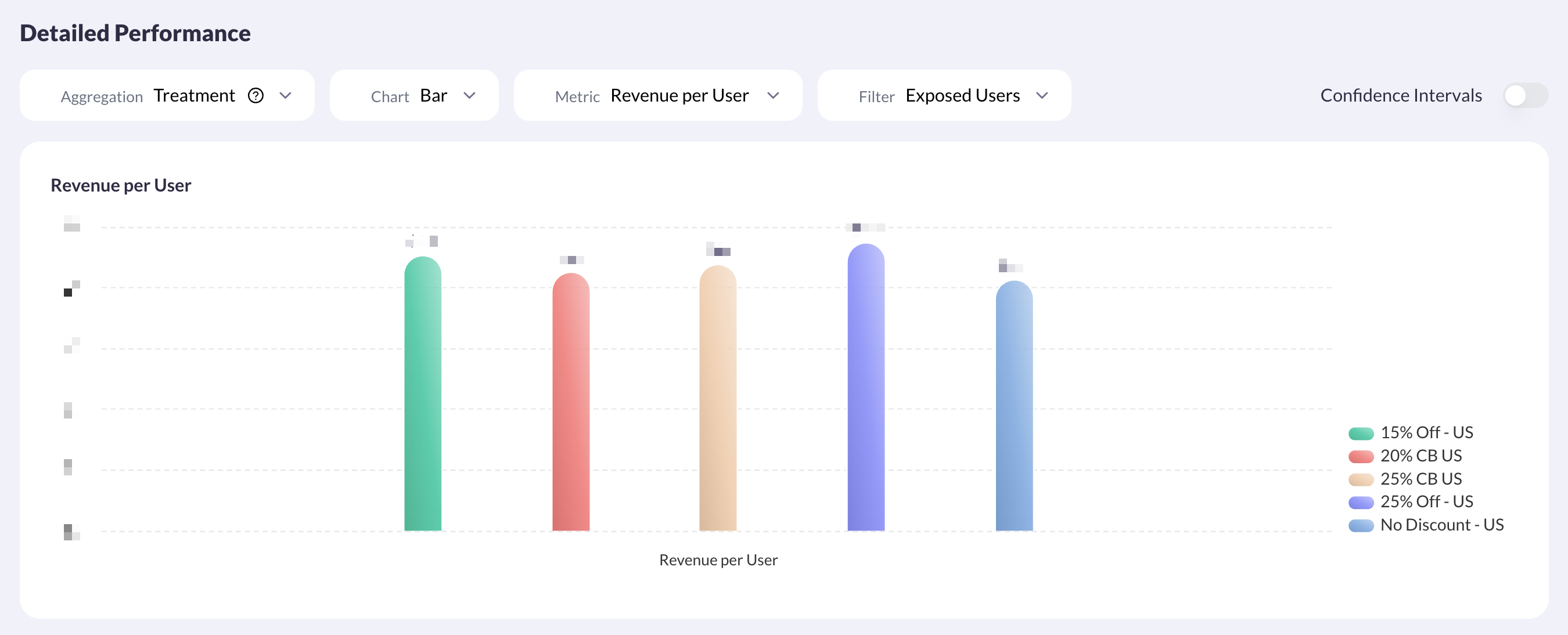

True Classic ran a 5-way offer test using Recart as the popup provider and Monocle as the testing platform. They wanted to learn how same-session revenue, per-visitor conversion rates, and gross profit per visitor changed between different offers and no offer or popup at all. They tested five variants: 15% cashback, 20% cashback, 15% off, 25% off, and no offer or popup triggered at all.

Results from Monocle showed 25% off winning on Revenue Per User, while 20% won on Gross Profit Per User (the primary metric for True Classic):

- The no-popup variant lost on all same-session revenue metrics. True Classic also collected no email/SMS subscribers from that group, which means no second-wave LTV impact from those channels.

- Cashback offers performed very close to the no-popup variant on revenue metrics. Customers tend to prefer percentage discounts over cashback offers.

- The 15% and 25% off popups performed strongly and made visitors significantly more likely to purchase.

Review their test in our Conversion Lab.

Juvenon

The team at Juvenon was curious to learn whether their popup had any negative revenue impact, so we helped them run a popup vs. no-popup test. The control group saw the popup, while the test group had no popup triggered.

Results clearly showed that the brand generates slightly more revenue when their popup is on, and they also gain thousands of new email/SMS subscribers every month.

Review their test in our Conversion Lab.

Perfect White Tee

America’s favorite tee brand, scaled to $50M+ by Johnny Hickey (follow him on X), wanted to answer the same question: is it worth running popups?

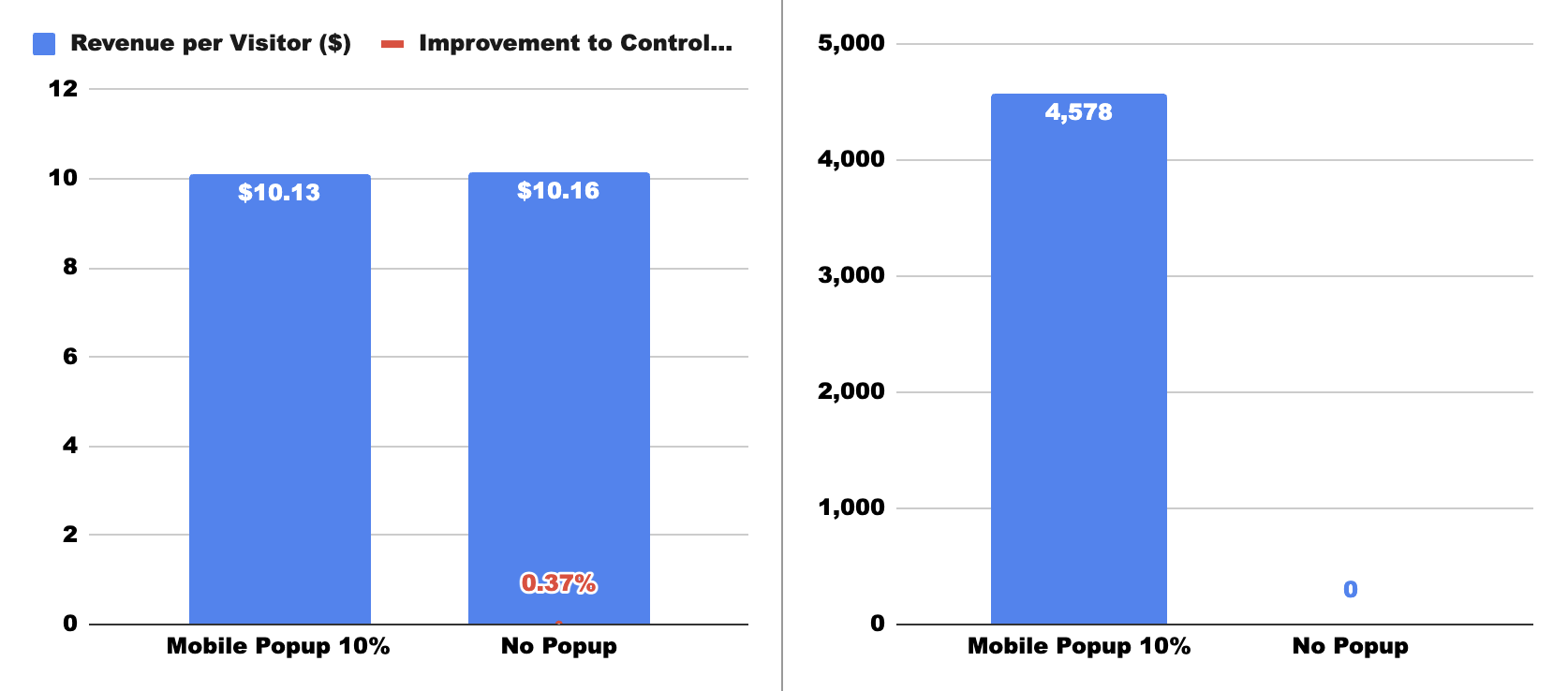

In their case, the 10% off popup had no positive or negative impact on revenue per visitor. Although the popup gave away a 10% discount, increases in conversion rate and AOV made up for the difference. At the same time, the popup variant collected more than 4,500 subscribers over the test period.

Does that mean popups never cause revenue loss? No, they might. But based on what we know today from extensive testing, very few brands experience revenue loss from popup usage. For most, it’s either neutral or positive.

Stop Arguing from Opinion – Start Testing

Despite the data, you still see people online vehemently arguing against popups. The problem? Most of them have never properly tested it. They’re forming opinions based on personal habits and preferences, not actual revenue data from their stores.

Before you decide whether popups hurt or help your store, run one of the two tests outlined above. And run it longer than you think. Revenue tests like these usually need at least $1M in revenue to gain statistical power. Don’t let someone else’s opinion—or even your own gut feeling—dictate your strategy when you can measure the actual impact.
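To see why volume matters, here is a rough sketch that puts a confidence interval around the RPV difference between the two groups. It uses a percentile bootstrap, which is one common option chosen here for illustration and not necessarily what Recart or any CRO tool computes. The visitor data is simulated, and `simulate`, `rpv`, and `bootstrap_rpv_diff` are hypothetical helper names.

```python
import random


def rpv(revenues: list[float]) -> float:
    """Revenue per visitor: total revenue divided by all visitors in the group."""
    return sum(revenues) / len(revenues)


def simulate(n: int, cr: float, mean_order: float, seed: int) -> list[float]:
    """Made-up per-visitor revenue: most visitors spend 0, buyers spend a random amount."""
    rng = random.Random(seed)
    return [
        rng.expovariate(1 / mean_order) if rng.random() < cr else 0.0
        for _ in range(n)
    ]


def bootstrap_rpv_diff(popup, holdout, n_resamples=1000, alpha=0.05, seed=0):
    """Percentile bootstrap interval for RPV(popup) - RPV(holdout)."""
    rng = random.Random(seed)
    diffs = []
    for _ in range(n_resamples):
        # Resample each group's visitors with replacement and recompute the gap.
        p = rng.choices(popup, k=len(popup))
        h = rng.choices(holdout, k=len(holdout))
        diffs.append(rpv(p) - rpv(h))
    diffs.sort()
    lower = diffs[int((alpha / 2) * n_resamples)]
    upper = diffs[int((1 - alpha / 2) * n_resamples) - 1]
    return lower, upper


# Simulated groups with made-up conversion rates and order values.
popup_group = simulate(1000, cr=0.032, mean_order=75.0, seed=1)
holdout_group = simulate(1000, cr=0.030, mean_order=77.0, seed=2)

low, high = bootstrap_rpv_diff(popup_group, holdout_group)
print(f"Observed RPV difference: {rpv(popup_group) - rpv(holdout_group):.2f}")
print(f"95% interval: {low:.2f} to {high:.2f}")
```

Because most visitors spend nothing and a few spend a lot, the interval stays wide when a test has few orders. If it spans zero, the test cannot yet tell a small win from a small loss, which is the practical reason to keep it running.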

The Bottom Line

The “popups hurt conversions” narrative is largely based on assumptions, not data. When you measure properly—tracking revenue by exposure, not just submissions—the story changes dramatically.

Could a popup hurt your specific store? Maybe. I haven’t seen it happen yet. You won’t know until you test it properly. And if you’re like most of the brands we’ve tested, you’ll find that the friction argument doesn’t hold up against the revenue data.

Ready to run your own popup incrementality test? Set up a proper A/B test in Recart with a no-popup control group and see what the data says about your store. Because in ecommerce, opinions are cheap—revenue data isn’t.